Introduction to R and RStudio

Overview

Teaching: 45 min

Exercises: 10 min

Questions

How to find your way around RStudio?

How to interact with R?

How to manage your environment?

How to install packages?

Objectives

Describe the purpose and use of each pane in the RStudio IDE

Locate buttons and options in the RStudio IDE

Define a variable

Assign data to a variable

Manage a workspace in an interactive R session

Use mathematical and comparison operators

Call functions

Manage packages

Motivation

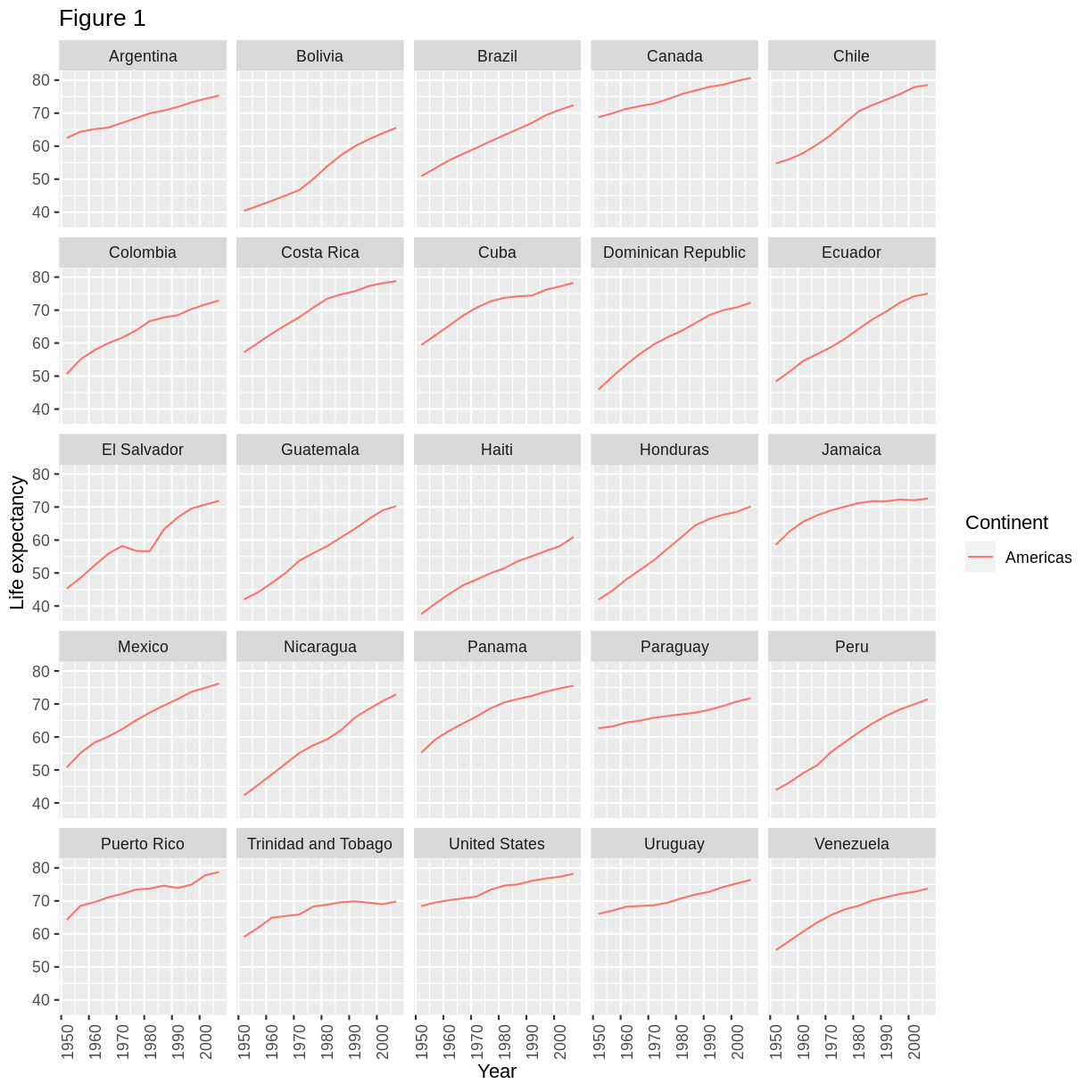

Science is a multi-step process: once you’ve designed an experiment and collected data, the real fun begins! This lesson will teach you how to start this process using R and RStudio. We will begin with raw data, perform exploratory analyses, and learn how to plot results graphically. This example starts with a dataset from gapminder.org containing population information for many countries through time. Can you read the data into R? Can you plot the population for Senegal? Can you calculate the average income for countries on the continent of Asia? By the end of these lessons you will be able to do things like plot the populations for all of these countries in under a minute!

Before Starting The Workshop

Please ensure you have the latest version of R and RStudio installed on your machine. This is important, as some packages used in the workshop may not install correctly (or at all) if R is not up to date.

Introduction to RStudio

Welcome to the R portion of the Software Carpentry workshop.

Throughout this lesson, we’re going to teach you some of the fundamentals of the R language as well as some best practices for organizing code for scientific projects that will make your life easier.

We’ll be using RStudio: a free, open source R Integrated Development Environment (IDE). It provides a built-in editor, works on all platforms (including on servers) and provides many advantages such as integration with version control and project management.

Basic layout

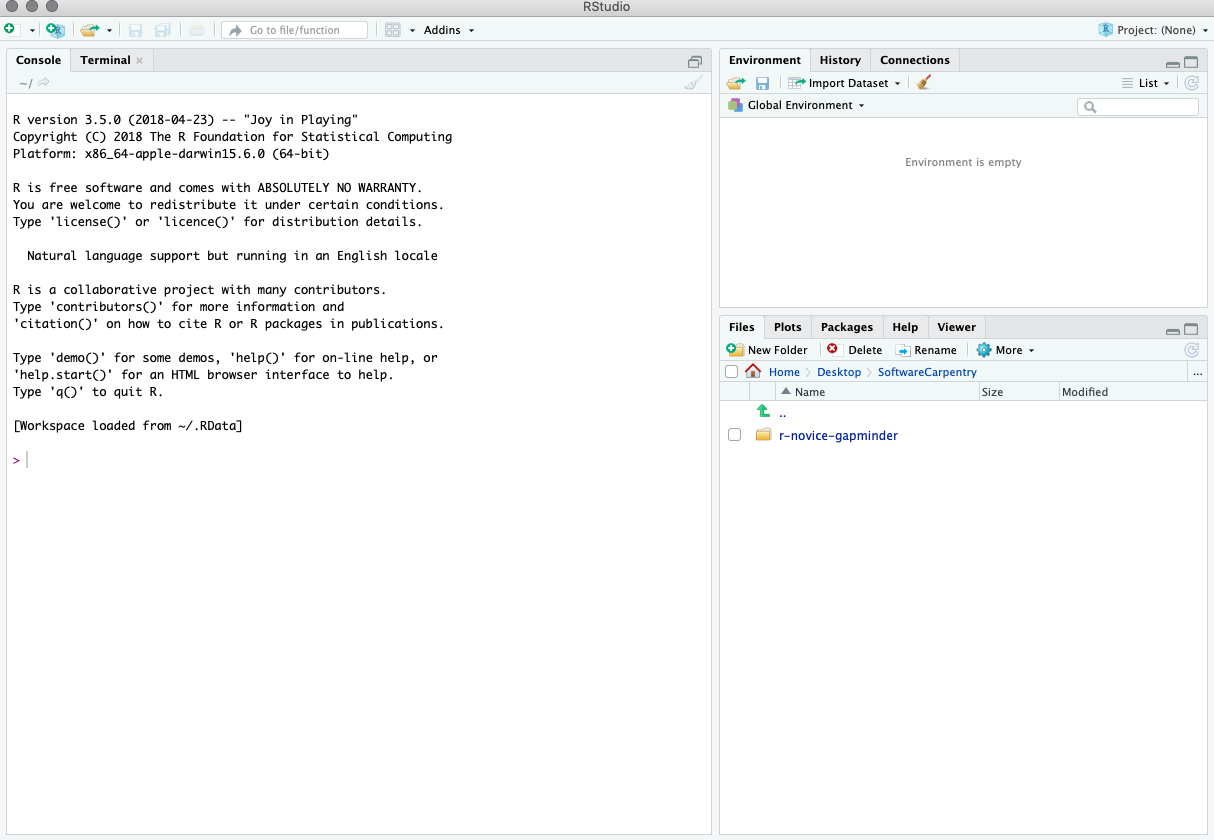

When you first open RStudio, you will be greeted by three panels:

- The interactive R console/Terminal (entire left)

- Environment/History/Connections (tabbed in upper right)

- Files/Plots/Packages/Help/Viewer (tabbed in lower right)

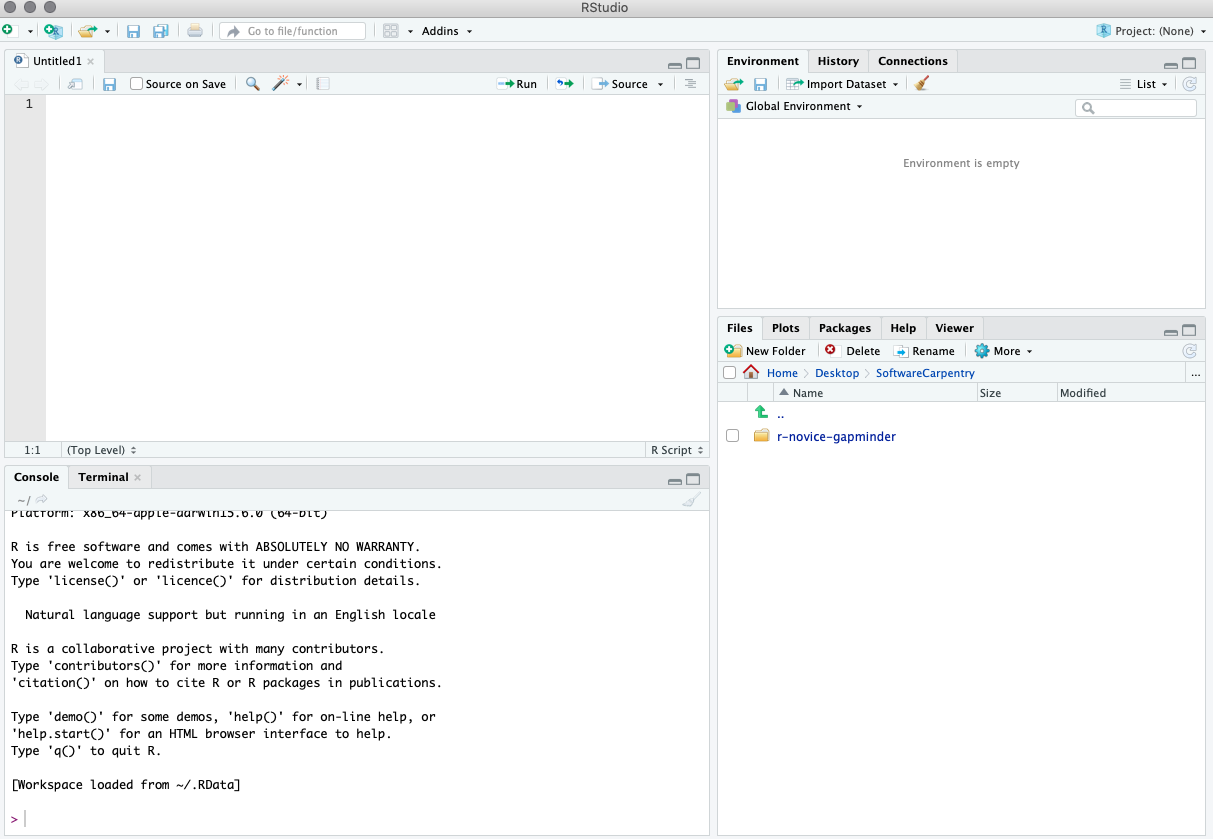

Once you open files, such as R scripts, an editor panel will also open in the top left.

Work flow within RStudio

There are two main ways one can work within RStudio:

- Test and play within the interactive R console, then copy code into a .R file to run later.

  - This works well when doing small tests and initially starting off.

  - It quickly becomes laborious.

- Start writing in a .R file and use RStudio’s shortcut keys for the Run command to push the current line, selected lines or modified lines to the interactive R console.

  - This is a great way to start; all your code is saved for later.

  - You will be able to run the file you create from within RStudio or using R’s source() function.

Tip: Running segments of your code

RStudio offers you great flexibility in running code from within the editor window. There are buttons, menu choices, and keyboard shortcuts. To run the current line, you can

- click on the Run button above the editor panel, or

- select “Run Lines” from the “Code” menu, or

- hit Ctrl+Return in Windows or Linux or ⌘+Return on OS X. (This shortcut can also be seen by hovering the mouse over the button.)

To run a block of code, select it and then Run. If you have modified a line of code within a block of code you have just run, there is no need to reselect the section and Run; you can use the next button along, Re-run the previous region. This will run the previous code block, including the modifications you have made.

Introduction to R

Much of your time in R will be spent in the R interactive console. This is where you will run all of your code, and it can be a useful environment to try out ideas before adding them to an R script file. This console in RStudio is the same as the one you would get if you typed in R in your command-line environment.

The first thing you will see in the R interactive session is a bunch of information, followed by a “>” and a blinking cursor. In many ways this is similar to the shell environment you learned about during the shell lessons: it operates on the same idea of a “Read, evaluate, print loop”: you type in commands, R tries to execute them, and then returns a result.

Using R as a calculator

The simplest thing you could do with R is to do arithmetic:

1 + 100

[1] 101

And R will print out the answer, with a preceding “[1]”. Don’t worry about this for now; we’ll explain it later. For now, think of it as indicating output.

Like bash, if you type in an incomplete command, R will wait for you to complete it:

> 1 +

+

Any time you hit return and the R session shows a “+” instead of a “>”, it means it’s waiting for you to complete the command. If you want to cancel a command you can hit Esc and RStudio will give you back the “>” prompt.

Tip: Canceling commands

If you’re using R from the command line instead of from within RStudio, you need to use Ctrl+C instead of Esc to cancel the command. This applies to Mac users as well!

Canceling a command isn’t only useful for killing incomplete commands: you can also use it to tell R to stop running code (for example if it’s taking much longer than you expect), or to get rid of the code you’re currently writing.

When using R as a calculator, the order of operations is the same as you would have learned back in school.

From highest to lowest precedence:

- Parentheses: (, )

- Exponents: ^ or **

- Multiply and divide: *, / (equal precedence, evaluated left to right)

- Add and subtract: +, - (equal precedence, evaluated left to right)

3 + 5 * 2

[1] 13

Use parentheses to group operations in order to force the order of evaluation if it differs from the default, or to make clear what you intend.

(3 + 5) * 2

[1] 16

This can get unwieldy when not needed, but clarifies your intentions. Remember that others may later read your code.

(3 + (5 * (2 ^ 2))) # hard to read

3 + 5 * 2 ^ 2 # clear, if you remember the rules

3 + 5 * (2 ^ 2) # if you forget some rules, this might help

The text after each line of code is called a “comment”. Anything that follows after the hash (or octothorpe) symbol # is ignored by R when it executes code.
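As a quick check of the rules above, here is a small sketch: the three expressions all evaluate to 23, operators of equal precedence such as * and / are applied from left to right, and ^ is the one common operator that R evaluates from right to left.

```r
# All three forms compute 3 + 5 * 4
first_form  <- (3 + (5 * (2 ^ 2)))
second_form <- 3 + 5 * 2 ^ 2
third_form  <- 3 + 5 * (2 ^ 2)
c(first_form, second_form, third_form)   # 23 23 23

# * and / share the same precedence and are evaluated left to right
10 / 2 * 5    # (10 / 2) * 5 = 25

# ^ is evaluated right to left: 2 ^ (3 ^ 2)
2 ^ 3 ^ 2     # 512
```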

Really small or large numbers are printed in scientific notation:

2/10000

[1] 2e-04

This is shorthand for “multiplied by 10^XX”. So 2e-4 is shorthand for 2 * 10^(-4).

You can write numbers in scientific notation too:

5e3 # Note the lack of minus here

[1] 5000
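If you would rather see a number written out in full (or force scientific notation), base R’s format() can switch the notation for a single value. A minimal sketch:

```r
format(2/10000, scientific = FALSE)    # "0.0002"
format(123456789, scientific = TRUE)   # "1.234568e+08"

# A number typed in scientific notation is just another way of writing it
5e3 == 5000                            # TRUE
```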

Mathematical functions

R has many built-in mathematical functions. To call a function, we can type its name, followed by opening and closing parentheses. Anything we type inside the parentheses is called the function’s arguments:

sin(1) # trigonometry functions

[1] 0.841471

log(1) # natural logarithm

[1] 0

log10(10) # base-10 logarithm

[1] 1

exp(0.5) # e^(1/2)

[1] 1.648721
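Some functions take more than one argument. A short sketch with two base R functions: arguments can be matched by position or, more readably, by name.

```r
log(8, base = 2)             # logarithm of 8 in base 2: 3
round(3.14159, digits = 2)   # 3.14

# The same calls, with arguments matched by position instead of by name
log(8, 2)
round(3.14159, 2)
```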

Don’t worry about trying to remember every function in R. You can look them up on Google, or, if you can remember the start of the function’s name, use the tab completion in RStudio.

This is one advantage that RStudio has over R on its own: it has auto-completion abilities that allow you to more easily look up functions, their arguments, and the values that they take.

Typing a ? before the name of a command will open the help page for that command. When using RStudio, this will open the ‘Help’ pane; if using R in the terminal, the help page will be shown as plain text or opened in your browser, depending on your settings. The help page will include a detailed description of the command and how it works. Scrolling to the bottom of the help page will usually show a collection of code examples which illustrate command usage. We’ll go through an example later.

Comparing things

We can also do comparisons in R:

1 == 1 # equality (note two equals signs, read as "is equal to")

[1] TRUE

1 != 2 # inequality (read as "is not equal to")

[1] TRUE

1 < 2 # less than

[1] TRUE

1 <= 1 # less than or equal to

[1] TRUE

1 > 0 # greater than

[1] TRUE

1 >= -9 # greater than or equal to

[1] TRUE

Tip: Comparing Numbers

A word of warning about comparing numbers: you should never use == to compare two numbers unless they are integers (a data type which can specifically represent only whole numbers). Computers may only represent decimal numbers with a certain degree of precision, so two numbers which look the same when printed out by R may actually have different underlying representations and therefore be different by a small margin of error (called machine numeric tolerance).

Instead you should use the all.equal function. Further reading: http://floating-point-gui.de/
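A small illustration of the warning above: 0.1 + 0.2 and 0.3 print the same, but their binary representations differ, so == reports FALSE while all.equal() compares them within a tolerance. Wrapping all.equal() in isTRUE() is a common idiom, because all.equal() returns a description of the difference (not FALSE) when the values do not match.

```r
0.1 + 0.2 == 0.3                    # FALSE
isTRUE(all.equal(0.1 + 0.2, 0.3))   # TRUE

# When the values really differ, all.equal() returns a character description
all.equal(1, 1.5)
```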

Variables and assignment

We can store values in variables using the assignment operator <-, like this:

x <- 1/40

Notice that assignment does not print a value. Instead, we stored it for later in something called a variable. x now contains the value 0.025:

x

[1] 0.025

More precisely, the stored value is a decimal approximation of this fraction called a floating point number.

Look for the Environment tab in the top right panel of RStudio, and you will see that x and its value have appeared. Our variable x can be used in place of a number in any calculation that expects a number:

log(x)

[1] -3.688879

Notice also that variables can be reassigned:

x <- 100

x used to contain the value 0.025 and now it has the value 100.

Assignment values can contain the variable being assigned to:

x <- x + 1 #notice how RStudio updates its description of x on the top right tab

y <- x * 2

The right hand side of the assignment can be any valid R expression. The right hand side is fully evaluated before the assignment occurs.
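Tracing the assignments above step by step shows that the right-hand side is evaluated first, using the value x holds at that moment:

```r
x <- 100
x <- x + 1   # right-hand side uses the old value: 100 + 1
y <- x * 2   # uses the updated x: 101 * 2
x            # 101
y            # 202
```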

Variable names can contain letters, numbers, underscores and periods but no spaces. They must start with a letter or a period followed by a letter (they cannot start with a number or an underscore). Variables beginning with a period are hidden variables. Different people use different conventions for long variable names; these include

- periods.between.words

- underscores_between_words

- camelCaseToSeparateWords

What you use is up to you, but be consistent.

It is also possible to use the = operator for assignment:

x = 1/40

But this is much less common among R users. The most important thing is to be consistent with the operator you use. There are occasionally places where it is less confusing to use <- than =, and it is the most common symbol used in the community. So the recommendation is to use <-.

Challenge 1

Which of the following are valid R variable names?

min_height
max.height
_age
.mass
MaxLength
min-length
2widths
celsius2kelvin

Solution to challenge 1

The following can be used as R variables:

min_height
max.height
MaxLength
celsius2kelvin

The following creates a hidden variable:

.mass

The following will not be able to be used to create a variable:

_age
min-length
2widths
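If you want R itself to check your answers, one option is a sketch relying on base R’s make.names(), which converts strings into syntactically valid names. A name is valid when make.names() returns it unchanged:

```r
candidates <- c("min_height", "max.height", "_age", ".mass",
                "MaxLength", "min-length", "2widths", "celsius2kelvin")

# make.names() repairs invalid names (e.g. "2widths" becomes "X2widths"),
# so comparing its output with the input flags the invalid ones
is_valid <- make.names(candidates) == candidates
names(is_valid) <- candidates
is_valid
```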

Vectorization

One final thing to be aware of is that R is vectorized, meaning that variables and functions can have vectors as values. Unlike in physics and mathematics, a vector in R describes a set of values in a certain order, all of the same data type. For example

1:5

[1] 1 2 3 4 5

2^(1:5)

[1] 2 4 8 16 32

x <- 1:5

2^x

[1] 2 4 8 16 32

This is incredibly powerful; we will discuss this further in an upcoming lesson.
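Arithmetic between two vectors of the same length works element by element, and many functions accept whole vectors too. A short sketch:

```r
c(1, 2, 3) + c(10, 20, 30)   # element-wise sum: 11 22 33
c(1, 2, 3) * c(4, 5, 6)      # element-wise product: 4 10 18
sqrt(c(4, 9, 16))            # applied to each element: 2 3 4
```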

Managing your environment

There are a few useful commands you can use to interact with the R session.

ls will list all of the variables and functions stored in the global environment (your working R session):

ls()

[1] "args" "dest_md" "op" "src_rmd" "x" "y"

Tip: hidden objects

Like in the shell, ls will hide any variables or functions starting with a “.” by default. To list all objects, type ls(all.names=TRUE) instead.
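A short sketch of the difference, using a hypothetical hidden variable named .secret:

```r
.secret <- 42   # hypothetical hidden variable (name starts with a period)
visible <- 1

".secret" %in% ls()                   # FALSE: hidden by default
".secret" %in% ls(all.names = TRUE)   # TRUE
"visible" %in% ls()                   # TRUE
```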

Note here that we didn’t give any arguments to ls, but we still needed to give the parentheses to tell R to call the function.

If we type ls by itself, R prints a bunch of code instead of a listing of objects.

ls

function (name, pos = -1L, envir = as.environment(pos), all.names = FALSE,

pattern, sorted = TRUE)

{

if (!missing(name)) {

pos <- tryCatch(name, error = function(e) e)

if (inherits(pos, "error")) {

name <- substitute(name)

if (!is.character(name))

name <- deparse(name)

warning(gettextf("%s converted to character string",

sQuote(name)), domain = NA)

pos <- name

}

}

all.names <- .Internal(ls(envir, all.names, sorted))

if (!missing(pattern)) {

if ((ll <- length(grep("[", pattern, fixed = TRUE))) &&

ll != length(grep("]", pattern, fixed = TRUE))) {

if (pattern == "[") {

pattern <- "\\["

warning("replaced regular expression pattern '[' by '\\\\['")

}

else if (length(grep("[^\\\\]\\[<-", pattern))) {

pattern <- sub("\\[<-", "\\\\\\[<-", pattern)

warning("replaced '[<-' by '\\\\[<-' in regular expression pattern")

}

}

grep(pattern, all.names, value = TRUE)

}

else all.names

}

<bytecode: 0x559cb3713ef0>

<environment: namespace:base>

What’s going on here?

Like everything in R, ls is the name of an object, and entering the name of an object by itself prints the contents of the object. The object x that we created earlier contains 1, 2, 3, 4, 5:

x

[1] 1 2 3 4 5

The object ls contains the R code that makes the ls function work! We’ll talk more about how functions work and start writing our own later.

You can use rm to delete objects you no longer need:

rm(x)

If you have lots of things in your environment and want to delete all of them, you can pass the results of ls to the rm function:

rm(list = ls())

In this case we’ve combined the two. Like the order of operations, anything inside the innermost parentheses is evaluated first, and so on.

In this case we’ve specified that the results of ls should be used for the list argument in rm. When assigning values to arguments by name, you must use the = operator!

If instead we use <-, there will be unintended side effects, or you may get an error message:

rm(list <- ls())

Error in rm(list <- ls()): ... must contain names or character strings
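A sketch of rm(list = ...) used more selectively: give it a character vector of names, and only those objects are removed. The object names here are hypothetical.

```r
tmp_a <- 1
tmp_b <- 2
keep_me <- 3

# Named argument: list = takes a character vector of object names
rm(list = c("tmp_a", "tmp_b"))

exists("tmp_a")     # FALSE
exists("keep_me")   # TRUE
```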

Tip: Warnings vs. Errors

Pay attention when R does something unexpected! Errors, like above, are thrown when R cannot proceed with a calculation. Warnings on the other hand usually mean that the function has run, but it probably hasn’t worked as expected.

In both cases, the message that R prints out usually gives you clues about how to fix the problem.
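A minimal sketch of the two situations. tryCatch() is used here only so that the example keeps running after the error; in your own session you would simply see the error message.

```r
# A warning: the call completes and returns a value, but probably not the one you wanted
result <- as.numeric("abc")   # Warning message: NAs introduced by coercion
result                        # NA

# An error: R stops and no value is returned
tryCatch("a" + 1, error = function(e) conditionMessage(e))
```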

R Packages

It is possible to add functions to R by writing a package, or by obtaining a package written by someone else. As of this writing, there are over 10,000 packages available on CRAN (the comprehensive R archive network). R and RStudio have functionality for managing packages:

- You can see what packages are installed by typing installed.packages()

- You can install packages by typing install.packages("packagename"), where packagename is the package name, in quotes.

- You can update installed packages by typing update.packages()

- You can remove a package with remove.packages("packagename")

- You can make a package available for use with library(packagename)
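A small sketch of two of the functions above, using the stats package, which ships with R, so nothing needs to be downloaded:

```r
# installed.packages() returns a matrix with one row per installed package
installed <- rownames(installed.packages())
"stats" %in% installed          # TRUE: stats is part of every R installation

# library() attaches an installed package so its functions can be used directly
library(stats)
"package:stats" %in% search()   # TRUE once the package is attached
```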

Packages can also be viewed, loaded, and detached in the Packages tab of the lower right panel in RStudio. Clicking on this tab will display all of the installed packages with a checkbox next to them. If the box next to a package name is checked, the package is loaded and if it is empty, the package is not loaded. Click an empty box to load that package and click a checked box to detach that package.

Packages can be installed and updated from the Packages tab with the Install and Update buttons at the top of the tab.

Challenge 2

What will be the value of each variable after each statement in the following program?

mass <- 47.5
age <- 122
mass <- mass * 2.3
age <- age - 20

Solution to challenge 2

mass <- 47.5

This will give a value of 47.5 for the variable mass.

age <- 122

This will give a value of 122 for the variable age.

mass <- mass * 2.3

This will multiply the existing value of 47.5 by 2.3 to give a new value of 109.25 to the variable mass.

age <- age - 20

This will subtract 20 from the existing value of 122 to give a new value of 102 to the variable age.

Challenge 3

Run the code from the previous challenge, and write a command to compare mass to age. Is mass larger than age?

Solution to challenge 3

One way of answering this question in R is to use the > to set up the following:

mass > age

[1] TRUE

This should yield a boolean value of TRUE since 109.25 is greater than 102.

Challenge 4

Clean up your working environment by deleting the mass and age variables.

Solution to challenge 4

We can use the rm command to accomplish this task:

rm(age, mass)

Challenge 5

Install the following packages: ggplot2, plyr, gapminder

Solution to challenge 5

We can use the install.packages() command to install the required packages.

install.packages("ggplot2")
install.packages("plyr")
install.packages("gapminder")

An alternate solution, to install multiple packages with a single install.packages() command, is:

install.packages(c("ggplot2", "plyr", "gapminder"))

Key Points

Use RStudio to write and run R programs.

R has the usual arithmetic operators and mathematical functions.

Use <- to assign values to variables.

Use ls() to list the variables in a program.

Use rm() to delete objects in a program.

Use install.packages() to install packages (libraries).

Project Management With RStudio

Overview

Teaching: 20 min

Exercises: 10 min

Questions

How can I manage my projects in R?

Objectives

Create self-contained projects in RStudio

Introduction

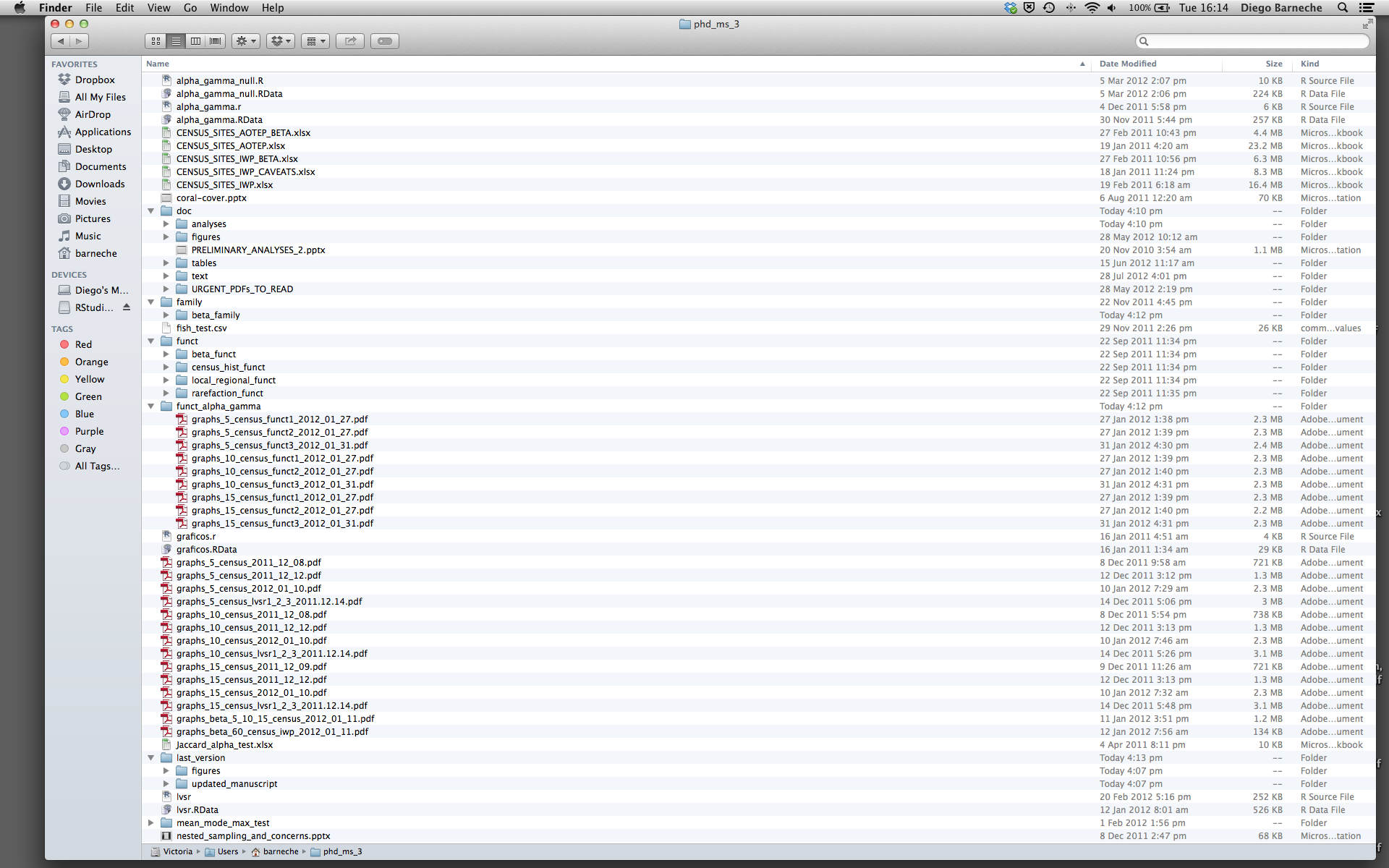

The scientific process is naturally incremental, and many projects start life as random notes, some code, then a manuscript, and eventually everything is a bit mixed together.

Managing your projects in a reproducible fashion doesn't just make your science reproducible, it makes your life easier.

— Vince Buffalo (@vsbuffalo) April 15, 2013

Most people tend to organize their projects like this:

There are many reasons why we should ALWAYS avoid this:

- It is really hard to tell which version of your data is the original and which is the modified;

- It gets really messy because it mixes files with various extensions together;

- It probably takes you a lot of time to actually find things, and to relate the correct figures to the exact code that has been used to generate them.

A good project layout will ultimately make your life easier:

- It will help ensure the integrity of your data;

- It makes it simpler to share your code with someone else (a lab-mate, collaborator, or supervisor);

- It allows you to easily upload your code with your manuscript submission;

- It makes it easier to pick the project back up after a break.

A possible solution

Fortunately, there are tools and packages which can help you manage your work effectively.

One of the most powerful and useful aspects of RStudio is its project management functionality. We’ll be using this today to create a self-contained, reproducible project.

Challenge 1: Creating a self-contained project

We’re going to create a new project in RStudio:

- Click the “File” menu button, then “New Project”.

- Click “New Directory”.

- Click “New Project”.

- Type in the name of the directory to store your project, e.g. “my_project”.

- If available, select the checkbox for “Create a git repository.”

- Click the “Create Project” button.

The simplest way to open an RStudio project once it has been created is to click through your file system to get to the directory where it was saved and double click on the .Rproj file. This will open RStudio and start your R session in the same directory as the .Rproj file. All your data, plots and scripts will now be relative to the project directory. RStudio projects have the added benefit of allowing you to open multiple projects at the same time, each open to its own project directory. This allows you to keep multiple projects open without them interfering with each other.

Challenge 2: Opening an RStudio project through the file system

- Exit RStudio.

- Navigate to the directory where you created a project in Challenge 1.

- Double click on the .Rproj file in that directory.

Best practices for project organization

Although there is no “best” way to lay out a project, there are some general principles to adhere to that will make project management easier:

Treat data as read only

This is probably the most important goal of setting up a project. Data are typically time consuming and/or expensive to collect. Working with them interactively (e.g., in Excel) where they can be modified means you are never sure of where the data came from, or how they have been modified since collection. It is therefore a good idea to treat your data as “read-only”.

Data Cleaning

In many cases your data will be “dirty”: it will need significant preprocessing to get into a format R (or any other programming language) will find useful. This task is sometimes called “data munging”. Storing these scripts in a separate folder, and creating a second “read-only” data folder to hold the “cleaned” data sets can prevent confusion between the two sets.

Treat generated output as disposable

Anything generated by your scripts should be treated as disposable: it should all be able to be regenerated from your scripts.

There are lots of different ways to manage this output. Having an output folder with different sub-directories for each separate analysis makes it easier later, since many analyses are exploratory and don’t end up being used in the final project, and some of the analyses get shared between projects.

Tip: Good Enough Practices for Scientific Computing

Good Enough Practices for Scientific Computing gives the following recommendations for project organization:

- Put each project in its own directory, which is named after the project.

- Put text documents associated with the project in the doc directory.

- Put raw data and metadata in the data directory, and files generated during cleanup and analysis in a results directory.

- Put source for the project’s scripts and programs in the src directory, and programs brought in from elsewhere or compiled locally in the bin directory.

- Name all files to reflect their content or function.
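The layout recommended above can also be created from within R. A minimal sketch with a hypothetical helper, create_project_layout(), built on base R’s dir.create(); by default it works in the current working directory, so run it from your project’s root or pass the project path as root.

```r
# Hypothetical helper: create the doc/, data/, results/, src/ and bin/ folders
create_project_layout <- function(root = ".") {
  dirs <- c("doc", "data", "results", "src", "bin")
  for (d in dirs) {
    # recursive = TRUE also creates root if it does not exist yet;
    # showWarnings = FALSE keeps quiet when a folder already exists
    dir.create(file.path(root, d), recursive = TRUE, showWarnings = FALSE)
  }
  invisible(file.path(root, dirs))   # return the created paths without printing
}

# create_project_layout()   # run from your project's root directory
```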

Separate function definition and application

One of the more effective ways to work with R is to start by writing the code you want to run directly in a .R script, and then running the selected lines (either using the keyboard shortcuts in RStudio or clicking the “Run” button) in the interactive R console.

When your project is in its early stages, the initial .R script file usually contains many lines of directly executed code. As it matures, reusable chunks get pulled into their own functions. It’s a good idea to separate these into two folders: one to store useful functions that you’ll reuse across analyses and projects, and one to store the analysis scripts.
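A sketch of that separation using base R’s source(), which runs an R file and makes the functions it defines available. In a real project the definitions would live in a file such as src/functions.R (a hypothetical path); here the file is written to a temporary location so the example is self-contained, and celsius_to_kelvin() is a hypothetical function.

```r
# --- Function definitions (normally kept in their own file) ---
functions_file <- tempfile(fileext = ".R")
writeLines(c(
  "celsius_to_kelvin <- function(temp_c) {",
  "  temp_c + 273.15",
  "}"
), functions_file)

# --- Analysis script: load the definitions, then apply them ---
source(functions_file)
celsius_to_kelvin(c(0, 100))   # 273.15 373.15
```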

Tip: avoiding duplication

You may find yourself using data or analysis scripts across several projects. Typically you want to avoid duplication to save space and avoid having to make updates to code in multiple places.

In this case, making “symbolic links”, which are essentially shortcuts to files somewhere else on a filesystem, can let you use existing code without having to move or copy it. Plus, any changes made to that code will only have to be made once.

On Linux and OS X you can use the ln -s command, and on Windows you can either create a shortcut or use the mklink command from the Windows terminal.
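From within R, base R’s file.symlink() creates the same kind of link as ln -s. A minimal sketch: every path below is hypothetical and placed under tempdir() so the example is self-contained. On Windows, creating symbolic links may require extra privileges, in which case a shortcut or mklink remains the fallback.

```r
# Hypothetical shared location and project folder, both under tempdir()
shared_dir  <- file.path(tempdir(), "shared-code")
project_src <- file.path(tempdir(), "my_project", "src")
dir.create(shared_dir, recursive = TRUE, showWarnings = FALSE)
dir.create(project_src, recursive = TRUE, showWarnings = FALSE)

# A script that several projects want to use
shared_script <- file.path(shared_dir, "helpers.R")
writeLines("helper <- function() 'shared'", shared_script)

# Link it into this project's src/ folder instead of copying it
link <- file.path(project_src, "helpers.R")
file.symlink(from = shared_script, to = link)

Sys.readlink(link)   # the path the link points to
```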

Save the data in the data directory

Now that we have a good directory structure, we will place the data file in the data/ directory.

Challenge 3

Download the gapminder data from here.

- Download the file (right mouse click on the link above -> “Save link as” / “Save file as”, or click on the link and after the page loads, press Ctrl+S or choose File -> “Save page as”)

- Make sure it’s saved under the name gapminder_data.csv

- Save the file in the data/ folder within your project.

We will load and inspect these data later.

Challenge 4

It is useful to get some general idea about the dataset, directly from the command line, before loading it into R. Understanding the dataset better will come in handy when making decisions on how to load it in R. Use the command-line shell to answer the following questions:

- What is the size of the file?

- How many rows of data does it contain?

- What kinds of values are stored in this file?

Solution to Challenge 4

By running these commands in the shell:

ls -lh data/gapminder_data.csv

-rw-r--r-- 1 runner docker 80K Jul 8 22:13 data/gapminder_data.csv

The file size is 80K.

wc -l data/gapminder_data.csv

1705 data/gapminder_data.csv

There are 1705 lines: one header line plus 1704 rows of data. The data looks like:

head data/gapminder_data.csv

country,year,pop,continent,lifeExp,gdpPercap
Afghanistan,1952,8425333,Asia,28.801,779.4453145
Afghanistan,1957,9240934,Asia,30.332,820.8530296
Afghanistan,1962,10267083,Asia,31.997,853.10071
Afghanistan,1967,11537966,Asia,34.02,836.1971382
Afghanistan,1972,13079460,Asia,36.088,739.9811058
Afghanistan,1977,14880372,Asia,38.438,786.11336
Afghanistan,1982,12881816,Asia,39.854,978.0114388
Afghanistan,1987,13867957,Asia,40.822,852.3959448
Afghanistan,1992,16317921,Asia,41.674,649.3413952
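The same questions can be answered from inside R. A sketch with a hypothetical helper, describe_file(), that roughly mirrors ls -lh, wc -l and head using base R’s file.size(), readLines() and head():

```r
# Hypothetical helper that roughly mirrors ls -lh, wc -l and head
describe_file <- function(path, n = 10) {
  lines <- readLines(path)
  list(
    size_bytes = file.size(path),   # like ls -l, but in bytes rather than "80K"
    n_lines    = length(lines),     # like wc -l (the header line is counted too)
    first      = head(lines, n)     # like head
  )
}

# Usage, from the project directory:
# describe_file("data/gapminder_data.csv")
```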

Tip: command line in RStudio

The Terminal tab in the console pane provides a convenient place to interact with the command line directly within RStudio.

Version Control

It is important to use version control with projects. Go here for a good lesson which describes using Git with RStudio.

Key Points

Use RStudio to create and manage projects with consistent layout.

Treat raw data as read-only.

Treat generated output as disposable.

Separate function definition and application.

Seeking Help

Overview

Teaching: 10 min

Exercises: 10 min

Questions

How can I get help in R?

Objectives

To be able to read R help files for functions and special operators.

To be able to use CRAN task views to identify packages to solve a problem.

To be able to seek help from your peers.

Reading Help files

R, and every package, provide help files for functions. The general syntax to search for help on any function, “function_name”, from a package loaded into your namespace (your interactive R session) is:

?function_name

help(function_name)

This will load up a help page in RStudio (or as plain text in R by itself).

Each help page is broken down into sections:

- Description: An extended description of what the function does.

- Usage: The arguments of the function and their default values.

- Arguments: An explanation of the data each argument is expecting.

- Details: Any important details to be aware of.

- Value: The data the function returns.

- See Also: Any related functions you might find useful.

- Examples: Some examples for how to use the function.

Different functions might have different sections, but these are the main ones you should be aware of.

Tip: Running Examples

From within the function help page, you can highlight code in the Examples and hit Ctrl+Return to run it in the RStudio console. This gives you a quick way to get a feel for how a function works.

Tip: Reading help files

One of the most daunting aspects of R is the large number of functions available. It would be prohibitive, if not impossible, to remember the correct usage for every function you use. Luckily, the help files mean you don’t have to!

Special Operators

To seek help on special operators, use quotes or backticks:

?"<-"

?`<-`

Getting help on packages

Many packages come with “vignettes”: tutorials and extended example documentation.

Without any arguments, vignette() will list all vignettes for all installed packages; vignette(package="package-name") will list all available vignettes for package-name, and vignette("vignette-name") will open the specified vignette.

If a package doesn’t have any vignettes, you can usually find help by typing help(package = "package-name").

RStudio also has a set of excellent cheatsheets for many packages.

When you kind of remember the function

If you’re not sure what package a function is in, or how it’s specifically spelled you can do a fuzzy search:

??function_name

A fuzzy search is when you search for an approximate string match. For example, you may remember that the function to set your working directory includes “set” in its name. You can do a fuzzy search to help you identify the function:

??set
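For functions that are already available in your session, base R’s apropos() offers a lighter-weight search than ??: it matches a regular expression against the names of objects on the search path. A short sketch:

```r
apropos("setw")      # includes "setwd", the function that sets the working directory
apropos("^read\\.")  # functions whose names start with "read.", e.g. read.csv
```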

When you have no idea where to begin

If you don’t know what function or package you need to use, CRAN Task Views is a specially maintained list of packages grouped into fields. This can be a good starting point.

When your code doesn’t work: seeking help from your peers

If you’re having trouble using a function, 9 times out of 10 the answer you are seeking is already on Stack Overflow. You can search using the [r] tag. Please make sure to see their page on how to ask a good question.

If you can’t find the answer, there are a few useful functions to help you ask a question from your peers:

?dput

dput() will dump the data you’re working with into a format that can be copied and pasted by anyone else into their R session.
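A short sketch with a small, hypothetical data frame: dput() prints R code, and pasting that code back into R recreates the same object.

```r
# A small, hypothetical data frame standing in for your own data
small_df <- data.frame(coat = c("calico", "black"), weight = c(2.1, 5.0))
dput(small_df)   # prints R code that recreates small_df

# Parsing and evaluating the printed code gives back the same object
recreated <- eval(parse(text = capture.output(dput(small_df))))
identical(recreated, small_df)   # TRUE
```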

sessionInfo()

R version 4.1.0 (2021-05-18)

Platform: x86_64-pc-linux-gnu (64-bit)

Running under: Ubuntu 20.04.2 LTS

Matrix products: default

BLAS: /usr/lib/x86_64-linux-gnu/blas/libblas.so.3.9.0

LAPACK: /usr/lib/x86_64-linux-gnu/lapack/liblapack.so.3.9.0

locale:

[1] LC_CTYPE=C.UTF-8 LC_NUMERIC=C LC_TIME=C.UTF-8

[4] LC_COLLATE=C.UTF-8 LC_MONETARY=C.UTF-8 LC_MESSAGES=C.UTF-8

[7] LC_PAPER=C.UTF-8 LC_NAME=C LC_ADDRESS=C

[10] LC_TELEPHONE=C LC_MEASUREMENT=C.UTF-8 LC_IDENTIFICATION=C

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] knitr_1.33

loaded via a namespace (and not attached):

[1] compiler_4.1.0 magrittr_2.0.1 tools_4.1.0 stringi_1.6.2 stringr_1.4.0

[6] xfun_0.24 evaluate_0.14

sessionInfo() will print out your current version of R, as well as any packages you have loaded. This can be useful for others to help reproduce and debug your issue.

Challenge 1

Look at the help for the c function. What kind of vector do you expect you will create if you evaluate the following:

c(1, 2, 3)
c('d', 'e', 'f')
c(1, 2, 'f')

Solution to Challenge 1

The c() function creates a vector, in which all elements are the same type. In the first case, the elements are numeric, in the second, they are characters, and in the third they are characters: the numeric values are “coerced” to be characters.

Challenge 2

Look at the help for the paste function. You’ll need to use this later. What is the difference between the sep and collapse arguments?

Solution to Challenge 2

To look at the help for the paste() function, use:

help("paste")
?paste

The difference between sep and collapse is a little tricky. The paste function accepts any number of arguments, each of which can be a vector of any length. The sep argument specifies the string used between concatenated terms (by default, a space). The result is a vector as long as the longest argument supplied to paste. In contrast, collapse specifies that after concatenation the elements are collapsed together using the given separator, the result being a single string. For example:

paste(c("a","b"), "c")
[1] "a c" "b c"

paste(c("a","b"), "c", sep = ",")
[1] "a,c" "b,c"

paste(c("a","b"), "c", collapse = "|")
[1] "a c|b c"

paste(c("a","b"), "c", sep = ",", collapse = "|")
[1] "a,c|b,c"

(For more information, scroll to the bottom of the ?paste help page and look at the examples, or try example('paste').)

Challenge 3

Use help to find a function (and its associated parameters) that you could use to load data from a tabular file in which columns are delimited with “\t” (tab) and the decimal point is a “.” (period). This check for the decimal separator is important, especially if you are working with international colleagues, because different countries have different conventions for the decimal point (e.g. comma vs period). Hint: use ??"read table" to look up functions related to reading in tabular data.

Solution to Challenge 3

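The standard R function for reading tab-delimited files with a period decimal separator is read.delim(). You can also do this with read.table(file, header = TRUE, sep = "\t"). The period is already the default decimal separator for read.table(), but unlike read.delim() it does not treat the first line as column names unless you set header = TRUE. You may also have to change the comment.char argument if your data file contains hash (#) characters, because read.table() treats # as the start of a comment by default.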
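As a rough check of the claim above, the sketch below writes a tiny tab-delimited file to a temporary path (the file contents and the name tsv_path are made up for illustration) and reads it both ways. With header = TRUE and sep = "\t", read.table() should give the same data frame as read.delim() for simple data like this.

```r
# Write a small tab-delimited file to a temporary location (illustrative data)
tsv_path <- tempfile(fileext = ".tsv")
writeLines(c("coat\tweight", "calico\t2.1", "black\t5.0"), tsv_path)

# read.delim() already assumes header = TRUE, sep = "\t" and dec = "."
via_delim <- read.delim(tsv_path)

# read.table() needs header and sep spelled out to do the same job
via_table <- read.table(tsv_path, header = TRUE, sep = "\t")

str(via_delim)
identical(via_delim, via_table)
```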

Other ports of call

Key Points

Use help() to get online help in R.

Data Structures

Overview

Teaching: 40 min

Exercises: 15 min

Questions

How can I read data in R?

What are the basic data types in R?

How do I represent categorical information in R?

Objectives

To be able to identify the 5 main data types.

To begin exploring data frames, and understand how they are related to vectors, factors and lists.

To be able to ask questions from R about the type, class, and structure of an object.

One of R’s most powerful features is its ability to deal with tabular data, such as you may already have in a spreadsheet or a CSV file. Let’s start by making a toy dataset in your data/ directory, called feline-data.csv:

cats <- data.frame(coat = c("calico", "black", "tabby"),
                   weight = c(2.1, 5.0, 3.2),
                   likes_string = c(1, 0, 1))

write.csv(x = cats, file = "data/feline-data.csv", row.names = FALSE)

The contents of the new file, feline-data.csv:

coat,weight,likes_string

calico,2.1,1

black,5.0,0

tabby,3.2,1

Tip: Editing Text files in R

Alternatively, you can create data/feline-data.csv using a text editor (Nano), or within RStudio with the File -> New File -> Text File menu item.

We can load this into R via the following:

cats <- read.csv(file = "data/feline-data.csv", stringsAsFactors = TRUE)

cats

coat weight likes_string

1 calico 2.1 1

2 black 5.0 0

3 tabby 3.2 1

The read.table function is used for reading in tabular data stored in a text file where the columns of data are separated by punctuation characters, as in CSV files (csv = comma-separated values). Tabs and commas are the most common punctuation characters used to separate, or delimit, data points in such files. For convenience, R provides two other versions of read.table: read.csv, for files where the data are separated with commas, and read.delim, for files where the data are separated with tabs. Of these three functions, read.csv is the most commonly used. If needed, it is possible to override the default delimiting punctuation mark for both read.csv and read.delim.
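To illustrate overriding the default delimiter, the sketch below writes a semicolon-delimited file (a format some spreadsheet programs produce in locales that use a comma as the decimal mark) to a temporary path and reads it with read.csv() by passing sep = ";". The file contents and the names semi_path and semi_cats are illustrative assumptions.

```r
# A small semicolon-delimited file (illustrative data)
semi_path <- tempfile(fileext = ".csv")
writeLines(c("coat;weight", "calico;2.1", "tabby;3.2"), semi_path)

# read.csv() defaults to sep = ","; override it for this file
semi_cats <- read.csv(semi_path, sep = ";")
semi_cats
```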

We can begin exploring our dataset right away, pulling out columns by specifying them using the $ operator:

cats$weight

[1] 2.1 5.0 3.2

cats$coat

[1] calico black tabby

Levels: black calico tabby

We can do other operations on the columns:

## Say we discovered that the scale weighs two kg light:

cats$weight + 2

[1] 4.1 7.0 5.2

paste("My cat is", cats$coat)

[1] "My cat is calico" "My cat is black" "My cat is tabby"

But what about

cats$weight + cats$coat

Warning in Ops.factor(cats$weight, cats$coat): '+' not meaningful for factors

[1] NA NA NA

Understanding what happened here is key to successfully analyzing data in R.

Data Types

If you guessed that the last command would fail because 2.1 plus "black" is nonsense, you’re right, and you already have some intuition for an important concept in programming called data types. We can ask what type of data something is:

typeof(cats$weight)

[1] "double"

There are 5 main types: double, integer, complex, logical and character.

typeof(3.14)

[1] "double"

typeof(1L) # The L suffix forces the number to be an integer, since by default R stores numbers as doubles (floating-point numbers)

[1] "integer"

typeof(1+1i)

[1] "complex"

typeof(TRUE)

[1] "logical"

typeof('banana')

[1] "character"

No matter how complicated our analyses become, all data in R is interpreted as one of these basic data types. This strictness has some really important consequences.

A user has added details of another cat. This information is in the file data/feline-data_v2.csv.

file.show("data/feline-data_v2.csv")

coat,weight,likes_string

calico,2.1,1

black,5.0,0

tabby,3.2,1

tabby,2.3 or 2.4,1

Load the new cats data like before, and check what type of data we find in the weight column:

cats <- read.csv(file="data/feline-data_v2.csv", stringsAsFactors = TRUE)

typeof(cats$weight)

[1] "integer"

Oh no, our weights aren’t the double type anymore! If we try to do the same math we did on them before, we run into trouble:

cats$weight + 2

Warning in Ops.factor(cats$weight, 2): '+' not meaningful for factors

[1] NA NA NA NA

What happened?

The cats data we are working with is something called a data frame. Data frames are one of the most common and versatile types of data structures we will work with in R. A given column in a data frame cannot be composed of different data types. In this case, R cannot read everything in the weight column as a double, so the data type of the entire column changes to something that is suitable for everything in the column.

When R reads a csv file, it reads it in as a data frame. Thus, when we loaded the cats csv file, it was stored as a data frame. We can check this by using the function class().

class(cats)

[1] "data.frame"

Data frames are composed of rows and columns, where each column has the same number of rows. Different columns in a data frame can be made up of different data types (this is what makes them so versatile), but everything in a given column needs to be the same type (e.g., all doubles, all characters, or all logicals).

Let’s explore more about different data structures and how they behave. For now, let’s remove that extra line from our cats data and reload it, while we investigate this behavior further:

feline-data.csv:

coat,weight,likes_string

calico,2.1,1

black,5.0,0

tabby,3.2,1

And back in RStudio:

cats <- read.csv(file="data/feline-data.csv", stringsAsFactors = TRUE)

Vectors and Type Coercion

To better understand this behavior, let’s meet another of the data structures: the vector.

my_vector <- vector(length = 3)

my_vector

[1] FALSE FALSE FALSE

A vector in R is essentially an ordered list of things, with the special condition that everything in the vector must be the same basic data type. If you don’t choose the data type, it’ll default to logical; or, you can declare an empty vector of whatever type you like.

another_vector <- vector(mode='character', length=3)

another_vector

[1] "" "" ""
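The sketch below shows what vector() fills in for a few different modes; each mode gets its own "empty" value. The variable names are illustrative only.

```r
# vector() fills a new vector with the "empty" value for the requested mode
numeric_vector <- vector(mode = "numeric", length = 2)  # 0 0
logical_vector <- vector(length = 2)                    # FALSE FALSE (the default mode)
list_vector    <- vector(mode = "list", length = 2)     # a list holding two NULLs

typeof(numeric_vector)  # "double"
typeof(logical_vector)  # "logical"
typeof(list_vector)     # "list"
```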

You can inspect the structure of a vector with str():

str(another_vector)

chr [1:3] "" "" ""

The somewhat cryptic output from this command indicates the basic data type found in this vector (in this case chr, character); an indication of the number of things in the vector (actually, the indexes of the vector, in this case [1:3]); and a few examples of what’s actually in the vector (in this case empty character strings). If we similarly do

str(cats$weight)

num [1:3] 2.1 5 3.2

we see that cats$weight is a vector, too: the columns of data we load into R data.frames are all vectors, and that’s the root of why R forces everything in a column to be the same basic data type.
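A small sketch of that point: is.vector() confirms a column is a vector, and sapply() applies typeof() to every column so you can see that each column has exactly one type. The data frame cats_demo is a hypothetical in-memory copy of the toy data, built with stringsAsFactors = FALSE so the result does not depend on the R version.

```r
# Hypothetical in-memory copy of the toy cat data
cats_demo <- data.frame(coat = c("calico", "black", "tabby"),
                        weight = c(2.1, 5.0, 3.2),
                        likes_string = c(1, 0, 1),
                        stringsAsFactors = FALSE)

# A column pulled out with $ is a plain vector
is.vector(cats_demo$weight)   # TRUE

# One basic data type per column
sapply(cats_demo, typeof)     # coat "character", weight "double", likes_string "double"
```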

Discussion 1

Why is R so opinionated about what we put in our columns of data? How does this help us?

Solution to Discussion 1

By keeping everything in a column the same, we allow ourselves to make simple assumptions about our data; if you can interpret one entry in the column as a number, then you can interpret all of them as numbers, so we don’t have to check every time. This consistency is what people mean when they talk about clean data; in the long run, strict consistency goes a long way to making our lives easier in R.

You can also make vectors with explicit contents with the combine function:

combine_vector <- c(2,6,3)

combine_vector

[1] 2 6 3

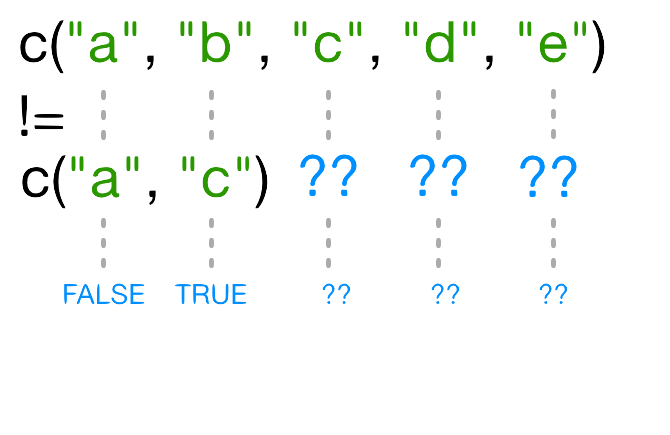

Given what we’ve learned so far, what do you think the following will produce?

quiz_vector <- c(2,6,'3')

This is something called type coercion, and it is the source of many surprises and the reason why we need to be aware of the basic data types and how R will interpret them. When R encounters a mix of types (here numeric and character) to be combined into a single vector, it will force them all to be the same type. Consider:

coercion_vector <- c('a', TRUE)

coercion_vector

[1] "a" "TRUE"

another_coercion_vector <- c(0, TRUE)

another_coercion_vector

[1] 0 1
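Coming back to the quiz above, a sketch of what R does with c(2,6,'3'), plus one more mix that follows the same coercion order (the name mixed_logical is illustrative):

```r
quiz_vector <- c(2, 6, '3')
quiz_vector            # "2" "6" "3": the numbers were coerced to character
typeof(quiz_vector)    # "character"

# Logical combined with integer: TRUE becomes 1L
mixed_logical <- c(TRUE, 2L)
mixed_logical          # 1 2
typeof(mixed_logical)  # "integer"
```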

The coercion rules go: logical -> integer -> numeric -> complex -> character, where -> can be read as “are transformed into”. You can try to force coercion against this flow using the as. functions:

character_vector_example <- c('0','2','4')

character_vector_example

[1] "0" "2" "4"

character_coerced_to_numeric <- as.numeric(character_vector_example)

character_coerced_to_numeric

[1] 0 2 4

numeric_coerced_to_logical <- as.logical(character_coerced_to_numeric)

numeric_coerced_to_logical

[1] FALSE TRUE TRUE

As you can see, some surprising things can happen when R forces one basic data type into another! Nitty-gritty of type coercion aside, the point is: if your data doesn’t look like what you thought it was going to look like, type coercion may well be to blame; make sure everything is the same type in your vectors and your columns of data.frames, or you will get nasty surprises!
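One of the most common surprises when coercing against the flow is a value that cannot be converted. In the sketch below (illustrative values), as.numeric() turns the string "two" into NA and issues the warning "NAs introduced by coercion"; counting NAs afterwards is a quick way to check how many values failed.

```r
# Strings that don't look like numbers become NA, with a warning
parsed <- as.numeric(c("1.5", "two", "3"))
parsed             # 1.5 NA 3

# How many values failed to convert?
sum(is.na(parsed)) # 1
```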

But coercion can also be very useful! For example, in our cats data likes_string is numeric, but we know that the 1s and 0s actually represent TRUE and FALSE (a common way of representing them). We should use the logical datatype here, which has two states: TRUE or FALSE, which is exactly what our data represents. We can ‘coerce’ this column to be logical by using the as.logical function:

cats$likes_string

[1] 1 0 1

cats$likes_string <- as.logical(cats$likes_string)

cats$likes_string

[1] TRUE FALSE TRUE

The combine function, c(), will also append things to an existing vector:

ab_vector <- c('a', 'b')

ab_vector

[1] "a" "b"

combine_example <- c(ab_vector, 'SWC')

combine_example

[1] "a" "b" "SWC"

You can also make series of numbers:

mySeries <- 1:10

mySeries

[1] 1 2 3 4 5 6 7 8 9 10

seq(10)

[1] 1 2 3 4 5 6 7 8 9 10

seq(1,10, by=0.1)

[1] 1.0 1.1 1.2 1.3 1.4 1.5 1.6 1.7 1.8 1.9 2.0 2.1 2.2 2.3 2.4

[16] 2.5 2.6 2.7 2.8 2.9 3.0 3.1 3.2 3.3 3.4 3.5 3.6 3.7 3.8 3.9

[31] 4.0 4.1 4.2 4.3 4.4 4.5 4.6 4.7 4.8 4.9 5.0 5.1 5.2 5.3 5.4

[46] 5.5 5.6 5.7 5.8 5.9 6.0 6.1 6.2 6.3 6.4 6.5 6.6 6.7 6.8 6.9

[61] 7.0 7.1 7.2 7.3 7.4 7.5 7.6 7.7 7.8 7.9 8.0 8.1 8.2 8.3 8.4

[76] 8.5 8.6 8.7 8.8 8.9 9.0 9.1 9.2 9.3 9.4 9.5 9.6 9.7 9.8 9.9

[91] 10.0
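Besides a step size, seq() can take the number of values you want through its length.out argument. The sketch below also shows seq_len(), which counts from 1 to n and returns an empty vector when n is 0 (whereas 1:0 would give c(1, 0)).

```r
# Four evenly spaced values between 1 and 10
seq(1, 10, length.out = 4)   # 1 4 7 10

# Count from 1 to n; safe when n is 0
seq_len(3)                   # 1 2 3
seq_len(0)                   # integer(0)
```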

We can ask a few questions about vectors:

sequence_example <- seq(10)

head(sequence_example, n=2)

[1] 1 2

tail(sequence_example, n=4)

[1] 7 8 9 10

length(sequence_example)

[1] 10

class(sequence_example)

[1] "integer"

typeof(sequence_example)

[1] "integer"

Finally, you can give names to elements in your vector:

my_example <- 5:8

names(my_example) <- c("a", "b", "c", "d")

my_example

a b c d

5 6 7 8

names(my_example)

[1] "a" "b" "c" "d"
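Once a vector has names, you can pull elements out by name as well as by position. A short sketch using the same my_example vector; unname() drops the names if you only want the values.

```r
my_example <- 5:8
names(my_example) <- c("a", "b", "c", "d")

my_example["b"]                   # named element b: 6
my_example[c("a", "d")]           # a: 5, d: 8
unname(my_example[c("a", "d")])   # 5 8 without names
```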

Challenge 1

Start by making a vector with the numbers 1 through 26. Multiply the vector by 2, and give the resulting vector names A through Z (hint: there is a built-in vector called LETTERS).

Solution to Challenge 1

x <- 1:26

x <- x * 2

names(x) <- LETTERS

Data Frames

We said that columns in data.frames were vectors:

str(cats$weight)

num [1:3] 2.1 5 3.2

str(cats$likes_string)

logi [1:3] TRUE FALSE TRUE

These make sense. But what about

str(cats$coat)

Factor w/ 3 levels "black","calico",..: 2 1 3

Tip: Renaming data frame columns

Data frames have column names, which can be accessed with the names() function.

names(cats)

[1] "coat" "weight" "likes_string"

If you want to rename the second column of cats, you can assign a new name to the second element of names(cats).

names(cats)[2] <- "weight_kg"

cats

coat weight_kg likes_string

1 calico 2.1 TRUE

2 black 5.0 FALSE

3 tabby 3.2 TRUE

Factors

Another important data structure is called a factor. Factors usually look like character data, but are typically used to represent categorical information. For example, let’s make a vector of strings labelling cat colorations for all the cats in our study:

coats <- c('tabby', 'tortoiseshell', 'tortoiseshell', 'black', 'tabby')

coats

[1] "tabby" "tortoiseshell" "tortoiseshell" "black"

[5] "tabby"

str(coats)

chr [1:5] "tabby" "tortoiseshell" "tortoiseshell" "black" "tabby"

We can turn a vector into a factor like so:

CATegories <- factor(coats)

class(CATegories)

[1] "factor"

str(CATegories)

Factor w/ 3 levels "black","tabby",..: 2 3 3 1 2

Now R has noticed that there are three possible categories in our data, but it also did something surprising: instead of printing out the strings we gave it, we got a bunch of numbers. R has replaced our human-readable categories with numbered indices under the hood. This is necessary because many statistical calculations use such numerical representations for categorical data:

typeof(coats)

[1] "character"

typeof(CATegories)

[1] "integer"

Challenge 2

Is there a factor in our cats data.frame? What is its name? Try using ?read.csv to figure out how to keep text columns as character vectors instead of factors; then write a command or two to show that the factor in cats is actually a character vector when loaded in this way.

Solution to Challenge 2

One solution is to use the argument stringsAsFactors:

cats <- read.csv(file="data/feline-data.csv", stringsAsFactors=FALSE)

str(cats$coat)

Another solution is to use the argument colClasses, which allows finer control. Here the first column, coat, is read as character, and NA lets the other two columns keep their default types:

cats <- read.csv(file="data/feline-data.csv", colClasses=c("character", NA, NA))

str(cats$coat)

Note: new students find the help files difficult to understand; make sure to let them know that this is typical, and encourage them to take their best guess based on semantic meaning, even if they aren’t sure.
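To compare the two settings without touching any file, read.csv() can also read from a string through its text argument. In this sketch the CSV text and the names csv_text, as_factor and as_character are illustrative.

```r
csv_text <- "coat,weight\ncalico,2.1\nblack,5.0\ntabby,3.2"

as_factor    <- read.csv(text = csv_text, stringsAsFactors = TRUE)
as_character <- read.csv(text = csv_text, stringsAsFactors = FALSE)

class(as_factor$coat)      # "factor"
levels(as_factor$coat)     # "black" "calico" "tabby"
class(as_character$coat)   # "character"
```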

In modelling functions, it’s important to know what the baseline level is. The baseline is assumed to be the first level of the factor, and by default the levels are sorted in alphabetical order. You can change this by specifying the levels:

mydata <- c("case", "control", "control", "case")

factor_ordering_example <- factor(mydata, levels = c("control", "case"))

str(factor_ordering_example)

Factor w/ 2 levels "control","case": 2 1 1 2

In this case, we’ve explicitly told R that “control” should be represented by 1, and “case” by 2. This designation can be very important for interpreting the results of statistical models!
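If a factor already exists, base R’s relevel() can move one level to the front so that it becomes the baseline, leaving the other levels in their current order. A sketch with the same case/control data (variable names illustrative):

```r
mydata <- c("case", "control", "control", "case")

default_factor <- factor(mydata)
levels(default_factor)        # "case" "control" (alphabetical)

# Make "control" the baseline (first) level
control_first <- relevel(default_factor, ref = "control")
levels(control_first)         # "control" "case"
as.integer(control_first)     # 2 1 1 2
```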

Lists

Another data structure you’ll want in your bag of tricks is the list. A list is simpler in some ways than the other types, because you can put anything you want in it. Remember that everything in a vector must be the same basic data type, but a list can hold different data types:

list_example <- list(1, "a", TRUE, 1+4i)

list_example

[[1]]

[1] 1

[[2]]

[1] "a"

[[3]]

[1] TRUE

[[4]]

[1] 1+4i

another_list <- list(title = "Numbers", numbers = 1:10, data = TRUE )

another_list

$title

[1] "Numbers"

$numbers

[1] 1 2 3 4 5 6 7 8 9 10

$data

[1] TRUE
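A sketch of getting things back out of a named list: $ and [[ ]] return the element itself, while single brackets [ ] return a smaller list that still wraps the element.

```r
another_list <- list(title = "Numbers", numbers = 1:10, data = TRUE)

another_list[["title"]]        # "Numbers"
another_list$numbers[3]        # 3

class(another_list["title"])   # "list": still wrapped in a list
length(another_list)           # 3
```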

We can now understand something a bit surprising in our data.frame; what happens if we run:

typeof(cats)

[1] "list"

We see that data.frames look like lists ‘under the hood’. This is because a data.frame is really a list of vectors and factors, as it has to be: in order to hold those columns that are a mix of vectors and factors, the data.frame needs something a bit more flexible than a vector to put all the columns together into a familiar table. In other words, a data.frame is a special list in which all the vectors must have the same length.

In our cats example, we have a factor (stored as integers), a double and a logical variable. As we have seen already, each column of a data.frame is a vector.

cats$coat

[1] calico black tabby

Levels: black calico tabby

cats[,1]

[1] calico black tabby

Levels: black calico tabby

typeof(cats[,1])

[1] "integer"

str(cats[,1])

Factor w/ 3 levels "black","calico",..: 2 1 3

Each row is an observation of different variables, itself a data.frame, and thus can be composed of elements of different types.

cats[1,]

coat weight likes_string

1 calico 2.1 1

typeof(cats[1,])

[1] "list"

str(cats[1,])

'data.frame': 1 obs. of 3 variables:

$ coat : Factor w/ 3 levels "black","calico",..: 2

$ weight : num 2.1

$ likes_string: num 1

Challenge 3

There are several subtly different ways to call variables, observations and elements from data.frames:

cats[1]

cats[[1]]

cats$coat

cats["coat"]

cats[1, 1]

cats[, 1]

cats[1, ]

Try out these examples and explain what is returned by each one.

Hint: Use the function typeof() to examine what is returned in each case.

Solution to Challenge 3

cats[1]

coat

1 calico

2 black

3 tabby

We can think of a data frame as a list of vectors. The single bracket [1] returns the first slice of the list, as another list. In this case it is the first column of the data frame.

cats[[1]]

[1] calico black tabby

Levels: black calico tabby

The double bracket [[1]] returns the contents of the list item. In this case it is the contents of the first column, a vector of type factor.

cats$coat

[1] calico black tabby

Levels: black calico tabby

This example uses the $ character to address items by name. coat is the first column of the data frame, again a vector of type factor.

cats["coat"]

coat

1 calico

2 black

3 tabby

Here we are using a single bracket ["coat"], replacing the index number with the column name. Like example 1, the returned object is a list.

cats[1, 1]

[1] calico

Levels: black calico tabby

This example uses a single bracket, but this time we provide row and column coordinates. The returned object is the value in row 1, column 1. The object is an integer, but because it is part of a vector of type factor, R displays the label “calico” associated with the integer value.

cats[, 1]

[1] calico black tabby

Levels: black calico tabby

Like the previous example, we use single brackets and provide row and column coordinates. The row coordinate is not specified, so R interprets this missing value as all the elements in this column vector.

cats[1, ]

coat weight likes_string

1 calico 2.1 1

Again we use the single bracket with row and column coordinates. The column coordinate is not specified. The return value is a one-row data frame (and therefore a list) containing all the values in the first row.

Matrices

Last but not least is the matrix. We can declare a matrix full of zeros:

matrix_example <- matrix(0, ncol=6, nrow=3)

matrix_example

[,1] [,2] [,3] [,4] [,5] [,6]

[1,] 0 0 0 0 0 0

[2,] 0 0 0 0 0 0

[3,] 0 0 0 0 0 0

And similar to other data structures, we can ask things about our matrix:

class(matrix_example)

[1] "matrix" "array"

typeof(matrix_example)

[1] "double"

str(matrix_example)

num [1:3, 1:6] 0 0 0 0 0 0 0 0 0 0 ...

dim(matrix_example)

[1] 3 6

nrow(matrix_example)

[1] 3

ncol(matrix_example)

[1] 6

Challenge 4

What do you think will be the result of length(matrix_example)? Try it. Were you right? Why / why not?

Solution to Challenge 4

matrix_example <- matrix(0, ncol=6, nrow=3)

length(matrix_example)

[1] 18

Because a matrix is a vector with added dimension attributes, length gives you the total number of elements in the matrix.
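To see the “vector with a dim attribute” idea directly, this sketch strips the dimensions with as.vector() and checks that the number of elements is unchanged (the name plain is illustrative).

```r
matrix_example <- matrix(0, ncol = 6, nrow = 3)
length(matrix_example)   # 18, i.e. 3 * 6
dim(matrix_example)      # 3 6

# Dropping the dim attribute leaves a plain vector of the same length
plain <- as.vector(matrix_example)
is.matrix(plain)         # FALSE
length(plain)            # 18
```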

Challenge 5

Make another matrix, this time containing the numbers 1:50, with 5 columns and 10 rows. Did the matrix function fill your matrix by column, or by row, as its default behaviour? See if you can figure out how to change this. (hint: read the documentation for matrix!)

Solution to Challenge 5

By default, matrix() fills the matrix column by column; setting byrow = TRUE fills it row by row:

x <- matrix(1:50, ncol=5, nrow=10)

x <- matrix(1:50, ncol=5, nrow=10, byrow = TRUE) # to fill by row
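A smaller version of the same experiment, where the first row makes the fill order easy to see (variable names illustrative):

```r
by_column <- matrix(1:6, nrow = 2)                 # default: fill column by column
by_row    <- matrix(1:6, nrow = 2, byrow = TRUE)   # fill row by row

by_column[1, ]   # 1 3 5
by_row[1, ]      # 1 2 3
```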

Challenge 6

Create a list of length two containing a character vector for each of the sections in this part of the workshop:

- Data types

- Data structures

Populate each character vector with the names of the data types and data structures we’ve seen so far.

Solution to Challenge 6

dataTypes <- c('double', 'complex', 'integer', 'character', 'logical')

dataStructures <- c('data.frame', 'vector', 'factor', 'list', 'matrix')

answer <- list(dataTypes, dataStructures)

Note: it’s nice to make a list in big writing on the board or taped to the wall listing all of these types and structures. Leave it up for the rest of the workshop to remind people of the importance of these basics.

Challenge 7

Consider the R output of the matrix below:

     [,1] [,2]
[1,]    4    1
[2,]    9    5
[3,]   10    7

What was the correct command used to write this matrix? Examine each command and try to figure out the correct one before typing them. Think about what matrices the other commands will produce.

matrix(c(4, 1, 9, 5, 10, 7), nrow = 3)

matrix(c(4, 9, 10, 1, 5, 7), ncol = 2, byrow = TRUE)

matrix(c(4, 9, 10, 1, 5, 7), nrow = 2)

matrix(c(4, 1, 9, 5, 10, 7), ncol = 2, byrow = TRUE)

Solution to Challenge 7

matrix(c(4, 1, 9, 5, 10, 7), ncol = 2, byrow = TRUE)

Key Points

Use read.csv to read tabular data in R.

The basic data types in R are double, integer, complex, logical, and character.

Use factors to represent categories in R.

Exploring Data Frames

Overview

Teaching: 20 min

Exercises: 10 min

Questions

How can I manipulate a data frame?

Objectives

Add and remove rows or columns.

Remove rows with NA values.

Append two data frames.

Understand what a factor is.

Convert a factor to a character vector and vice versa.

Display basic properties of data frames including size and class of the columns, names, and first few rows.

At this point, you’ve seen it all: in the last lesson, we toured all the basic data types and data structures in R. Everything you do will be a manipulation of those tools. But most of the time, the star of the show is the data frame—the table that we created by loading information from a csv file. In this lesson, we’ll learn a few more things about working with data frames.

Adding columns and rows in data frames

We already learned that the columns of a data frame are vectors, so that our data are consistent in type throughout the columns. As such, if we want to add a new column, we can start by making a new vector:

age <- c(2, 3, 5)

cats

coat weight likes_string

1 calico 2.1 1

2 black 5.0 0

3 tabby 3.2 1

We can then add this as a column via:

cbind(cats, age)

coat weight likes_string age

1 calico 2.1 1 2

2 black 5.0 0 3

3 tabby 3.2 1 5

Note that if we tried to add a vector of ages with a different number of entries than the number of rows in the data frame, it would fail:

age <- c(2, 3, 5, 12)

cbind(cats, age)

Error in data.frame(..., check.names = FALSE): arguments imply differing number of rows: 3, 4

age <- c(2, 3)

cbind(cats, age)

Error in data.frame(..., check.names = FALSE): arguments imply differing number of rows: 3, 2

Why didn’t this work? Of course, R wants to see one element in our new column for every row in the table:

nrow(cats)

[1] 3

length(age)

[1] 2

So for it to work we need to have nrow(cats) = length(age). Let’s overwrite the content of cats with our new data frame.

age <- c(2, 3, 5)

cats <- cbind(cats, age)

Now how about adding rows? We already know that the rows of a data frame are lists:

newRow <- list("tortoiseshell", 3.3, TRUE, 9)

cats <- rbind(cats, newRow)

Warning in `[<-.factor`(`*tmp*`, ri, value = "tortoiseshell"): invalid factor level, NA generated

Looks like our attempt to use the rbind() function returns a warning. Recall that, unlike errors, warnings do not necessarily stop a function from performing its intended action. You can confirm this by taking a look at the cats data frame.

cats

coat weight likes_string age

1 calico 2.1 1 2

2 black 5.0 0 3

3 tabby 3.2 1 5

4 <NA> 3.3 1 9

Notice that we did successfully add a new row, but there is NA in the coat column where we expected “tortoiseshell” to be. Why did this happen?

Factors

For an object containing the data type factor, each different value represents what is called a level. In our case, the factor “coat” has 3 levels: “black”, “calico”, and “tabby”. R will only accept values that match one of the levels. If you add a new value, it will become NA.

The warning is telling us that we unsuccessfully added “tortoiseshell” to our coat factor, but 3.3 (a numeric), TRUE (a logical), and 9 (a numeric) were successfully added to weight, likes_string, and age, respectively, since those variables are not factors. To successfully add a cat with a “tortoiseshell” coat, add “tortoiseshell” as a possible level of the factor:

levels(cats$coat)

[1] "black" "calico" "tabby"

levels(cats$coat) <- c(levels(cats$coat), "tortoiseshell")

cats <- rbind(cats, list("tortoiseshell", 3.3, TRUE, 9))
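The same behaviour can be reproduced on a bare factor. In this sketch (names illustrative), assigning an unknown value gives NA with an “invalid factor level” warning, while adding the level first lets the assignment succeed.

```r
coat <- factor(c("calico", "black", "tabby"))

# Unknown value: becomes NA, with a warning
bad <- coat
bad[4] <- "tortoiseshell"

# Add the level first, then assign
good <- coat
levels(good) <- c(levels(good), "tortoiseshell")
good[4] <- "tortoiseshell"
good
```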

Alternatively, we can change a factor into a character vector; we lose the handy categories of the factor, but we can subsequently add any word we want to the column without babysitting the factor levels:

str(cats)

'data.frame': 5 obs. of 4 variables:

$ coat : Factor w/ 4 levels "black","calico",..: 2 1 3 NA 4

$ weight : num 2.1 5 3.2 3.3 3.3

$ likes_string: int 1 0 1 1 1

$ age : num 2 3 5 9 9

cats$coat <- as.character(cats$coat)

str(cats)

'data.frame': 5 obs. of 4 variables:

$ coat : chr "calico" "black" "tabby" NA ...

$ weight : num 2.1 5 3.2 3.3 3.3

$ likes_string: int 1 0 1 1 1

$ age : num 2 3 5 9 9

Challenge 1

Let’s imagine that 1 cat year is equivalent to 7 human years.

- Create a vector called human_age by multiplying cats$age by 7.

- Convert human_age to a factor.

- Convert human_age back to a numeric vector using the as.numeric() function. Now divide it by 7 to get the original ages back. Explain what happened.

Solution to Challenge 1

human_age <- cats$age * 7

human_age <- factor(human_age)

as.factor(human_age) works just as well.

as.numeric(human_age) yields 1 2 3 4 4 because factors are stored as integers (here, 1:4), each of which is associated with a label (here, 14, 21, 35, and 63). Converting the factor to a numeric vector gives us the underlying integers, not the labels. If we want the original numbers, we need to convert human_age to a character vector (using as.character(human_age)) and then to a numeric vector (why does this work?). This comes up in real life when we accidentally include a character somewhere in a column of a .csv file supposed to only contain numbers, and forget to set stringsAsFactors=FALSE when we read in the data.
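A self-contained sketch of the round trip described above, using the ages from our cats data (2, 3, 5, 9, 9) typed in directly: as.numeric() on the factor returns the level codes, while going through as.character() first recovers the labels.

```r
ages <- c(2, 3, 5, 9, 9)
human_age <- factor(ages * 7)

levels(human_age)                         # "14" "21" "35" "63"
as.numeric(human_age)                     # 1 2 3 4 4  (the level codes)
as.numeric(as.character(human_age)) / 7   # 2 3 5 9 9  (the original ages)
```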

Removing rows

We now know how to add rows and columns to our data frame in R—but in our first attempt to add a “tortoiseshell” cat to the data frame we have accidentally added a garbage row:

cats

coat weight likes_string age

1 calico 2.1 1 2

2 black 5.0 0 3

3 tabby 3.2 1 5

4 <NA> 3.3 1 9

5 tortoiseshell 3.3 1 9

We can ask for a data frame minus this offending row:

cats[-4, ]

coat weight likes_string age

1 calico 2.1 1 2

2 black 5.0 0 3

3 tabby 3.2 1 5

5 tortoiseshell 3.3 1 9

Notice the comma with nothing after it to indicate that we want to drop the entire fourth row.

Note: we could also remove both new rows at once by putting the row numbers inside of a vector: cats[c(-4,-5), ]
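A quick sketch of that idea on a hypothetical five-row copy of the data (cats_demo): c(-4, -5) and -c(4, 5) select the same rows, because the minus sign negates the whole index vector.

```r
# Hypothetical five-row data frame mirroring the cats example
cats_demo <- data.frame(coat = c("calico", "black", "tabby", NA, "tortoiseshell"),
                        weight = c(2.1, 5.0, 3.2, 3.3, 3.3))

trimmed   <- cats_demo[c(-4, -5), ]
same_trim <- cats_demo[-c(4, 5), ]

nrow(trimmed)                  # 3
identical(trimmed, same_trim)  # TRUE
```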

Alternatively, we can drop all rows with NA values:

na.omit(cats)

coat weight likes_string age

1 calico 2.1 1 2

2 black 5.0 0 3

3 tabby 3.2 1 5

5 tortoiseshell 3.3 1 9

Let’s reassign the output to cats, so that our changes will be permanent:

cats <- na.omit(cats)

Removing columns

We can also remove columns in our data frame. What if we want to remove the column “age”? We can remove it in two ways: by column number (index) or by name.

cats[,-4]

coat weight likes_string

1 calico 2.1 1

2 black 5.0 0

3 tabby 3.2 1

5 tortoiseshell 3.3 1

Notice the comma with nothing before it, indicating we want to keep all of the rows.

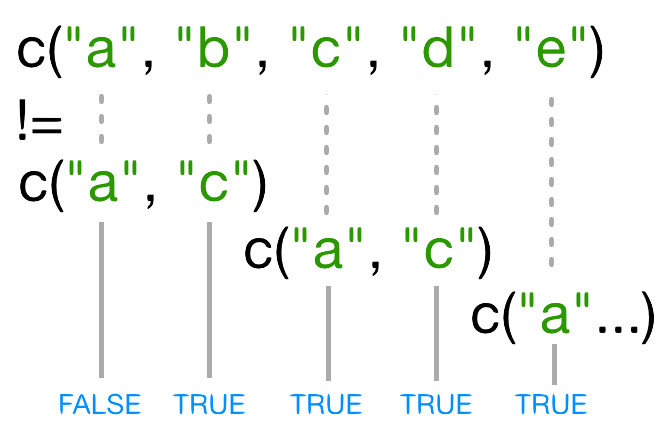

Alternatively, we can drop the column by using the column name and the %in% operator. The %in% operator goes through each element of its left argument, in this case the names of cats, and asks, “Does this element occur in the second argument?”

drop <- names(cats) %in% c("age")

cats[,!drop]

coat weight likes_string

1 calico 2.1 1

2 black 5.0 0

3 tabby 3.2 1

5 tortoiseshell 3.3 1

We will cover subsetting with logical operators like %in% in more detail in the next episode. See the section Subsetting through other logical operations.
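Two other base R ways to drop a column by name are sketched below on a hypothetical small data frame (cats_demo): comparing names() with != to build the column selection, or assigning NULL to the column, which deletes it from the data frame in place.

```r
# Hypothetical small data frame with an age column
cats_demo <- data.frame(coat = c("calico", "black"),
                        weight = c(2.1, 5.0),
                        age = c(2, 3))

# Keep every column whose name is not "age"
no_age <- cats_demo[, names(cats_demo) != "age"]
names(no_age)       # "coat" "weight"

# Assigning NULL removes the column from cats_demo itself
cats_demo$age <- NULL
names(cats_demo)    # "coat" "weight"
```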

Appending to a data frame

The key to remember when adding data to a data frame is that columns are vectors and rows are lists. We can also glue two data frames together with rbind:

cats <- rbind(cats, cats)

cats

coat weight likes_string age

1 calico 2.1 1 2

2 black 5.0 0 3

3 tabby 3.2 1 5

5 tortoiseshell 3.3 1 9

11 calico 2.1 1 2

21 black 5.0 0 3

31 tabby 3.2 1 5

51 tortoiseshell 3.3 1 9

But now the row names are unnecessarily complicated. We can remove the rownames, and R will automatically re-name them sequentially:

rownames(cats) <- NULL

cats

coat weight likes_string age

1 calico 2.1 1 2

2 black 5.0 0 3

3 tabby 3.2 1 5

4 tortoiseshell 3.3 1 9

5 calico 2.1 1 2

6 black 5.0 0 3

7 tabby 3.2 1 5

8 tortoiseshell 3.3 1 9

Challenge 2

You can create a new data frame right from within R with the following syntax:

df <- data.frame(id = c("a", "b", "c"), x = 1:3, y = c(TRUE, TRUE, FALSE), stringsAsFactors = FALSE)

Make a data frame that holds the following information for yourself:

- first name

- last name

- lucky number

Then use rbind to add an entry for the people sitting beside you. Finally, use cbind to add a column with each person’s answer to the question, “Is it time for coffee break?”

Solution to Challenge 2

df <- data.frame(first = c("Grace"), last = c("Hopper"), lucky_number = c(0), stringsAsFactors = FALSE)

df <- rbind(df, list("Marie", "Curie", 238))

df <- cbind(df, coffeetime = c(TRUE, TRUE))

Realistic example

So far, you have seen the basics of manipulating data frames with our cat data; now let’s use those skills to digest a more realistic dataset. Let’s read in the gapminder dataset that we downloaded previously:

gapminder <- read.csv("data/gapminder_data.csv", stringsAsFactors = TRUE)

Miscellaneous Tips

- Another type of file you might encounter is the tab-separated value file (.tsv). To specify a tab as a separator, use sep = "\t" or read.delim().

- Files can also be downloaded directly from the Internet into a local folder of your choice on your computer using the download.file function. The read.csv function can then be executed to read the downloaded file from the download location, for example:

download.file("https://raw.githubusercontent.com/swcarpentry/r-novice-gapminder/gh-pages/_episodes_rmd/data/gapminder_data.csv", destfile = "data/gapminder_data.csv")

gapminder <- read.csv("data/gapminder_data.csv", stringsAsFactors = TRUE)

- Alternatively, you can read files directly into R from the Internet by replacing the file paths with a web address in read.csv. Note that in doing this no local copy of the csv file is saved onto your computer. For example:

gapminder <- read.csv("https://raw.githubusercontent.com/swcarpentry/r-novice-gapminder/gh-pages/_episodes_rmd/data/gapminder_data.csv", stringsAsFactors = TRUE)

- You can read directly from Excel spreadsheets without converting them to plain text first by using the readxl package.

Let’s investigate gapminder a bit; the first thing we should always do is check out what the data looks like with str:

str(gapminder)

'data.frame': 1704 obs. of 6 variables:

$ country : Factor w/ 142 levels "Afghanistan",..: 1 1 1 1 1 1 1 1 1 1 ...

$ year : int 1952 1957 1962 1967 1972 1977 1982 1987 1992 1997 ...

$ pop : num 8425333 9240934 10267083 11537966 13079460 ...

$ continent: Factor w/ 5 levels "Africa","Americas",..: 3 3 3 3 3 3 3 3 3 3 ...

$ lifeExp : num 28.8 30.3 32 34 36.1 ...

$ gdpPercap: num 779 821 853 836 740 ...

Another way to examine the structure of gapminder is the summary function. This function works on many kinds of objects in R. For data frames, summary gives a numeric, tabular, or descriptive summary of each column. Factor columns are summarized by the number of items in each level, numeric or integer columns by descriptive statistics (minimum, quartiles, mean, and maximum), and character columns by their length, class, and mode.

summary(gapminder$country)

Afghanistan Albania Algeria

12 12 12

Angola Argentina Australia

12 12 12

Austria Bahrain Bangladesh

12 12 12

Belgium Benin Bolivia

12 12 12

Bosnia and Herzegovina Botswana Brazil

12 12 12

Bulgaria Burkina Faso Burundi

12 12 12

Cambodia Cameroon Canada

12 12 12

Central African Republic Chad Chile

12 12 12

China Colombia Comoros

12 12 12

Congo Dem. Rep. Congo Rep. Costa Rica

12 12 12

Cote d'Ivoire Croatia Cuba

12 12 12

Czech Republic Denmark Djibouti

12 12 12

Dominican Republic Ecuador Egypt

12 12 12

El Salvador Equatorial Guinea Eritrea

12 12 12

Ethiopia Finland France

12 12 12

Gabon Gambia Germany

12 12 12

Ghana Greece Guatemala

12 12 12

Guinea Guinea-Bissau Haiti

12 12 12

Honduras Hong Kong China Hungary

12 12 12

Iceland India Indonesia

12 12 12

Iran Iraq Ireland

12 12 12

Israel Italy Jamaica

12 12 12

Japan Jordan Kenya

12 12 12

Korea Dem. Rep. Korea Rep. Kuwait

12 12 12

Lebanon Lesotho Liberia

12 12 12

Libya Madagascar Malawi

12 12 12

Malaysia Mali Mauritania

12 12 12

Mauritius Mexico Mongolia

12 12 12

Montenegro Morocco Mozambique

12 12 12

Myanmar Namibia Nepal

12 12 12

Netherlands New Zealand Nicaragua

12 12 12

Niger Nigeria Norway

12 12 12

Oman Pakistan Panama

12 12 12

(Other)

516
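To see how summary treats each kind of column side by side, here is a sketch on a small, made-up data frame. It has one factor, one numeric, and one character column, and none of its values come from gapminder.

```r
# Made-up data frame with one column of each kind
df <- data.frame(
  continent = factor(c("Asia", "Asia", "Africa")),
  value     = c(1.5, 2, 3.5),
  label     = c("a", "b", "c"),
  stringsAsFactors = FALSE
)

# One summary per column: counts, descriptive statistics, and length/class/mode
summary(df)

# Summarizing a single factor column gives the count per level
summary(df$continent)  # Africa 1, Asia 2
```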

Along with the str and summary functions, we can examine individual columns of the data frame with the typeof function:

typeof(gapminder$year)

[1] "integer"

typeof(gapminder$country)

[1] "integer"

str(gapminder$country)

Factor w/ 142 levels "Afghanistan",..: 1 1 1 1 1 1 1 1 1 1 ...
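typeof reports "integer" for country because a factor is stored as integer codes that point into a set of level labels. Here is a small sketch with made-up values that shows the storage type, the class, the levels, and the codes separately.

```r
# A small factor built from made-up values
continent <- factor(c("Asia", "Africa", "Asia"))

typeof(continent)      # "integer": the underlying storage type
class(continent)       # "factor": how R treats the object
levels(continent)      # "Africa" "Asia": the labels, sorted alphabetically
as.integer(continent)  # 2 1 2: the integer code stored for each element
```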

We can also interrogate the data frame for information about its dimensions. Remembering that str(gapminder) said there were 1704 observations of 6 variables in gapminder, what do you think the following will produce, and why?

length(gapminder)

[1] 6

A fair guess would have been to say that the length of a data frame would be the number of rows it has (1704), but this is not the case; remember, a data frame is a list of vectors and factors:

typeof(gapminder)

[1] "list"

When length gave us 6, it was because gapminder is built out of a list of 6 columns. To get the number of rows and columns in our dataset, try:

nrow(gapminder)

[1] 1704

ncol(gapminder)

[1] 6

Or, both at once:

dim(gapminder)

[1] 1704 6

We’ll also likely want to know what the titles of all the columns are, so we can ask for them later:

colnames(gapminder)

[1] "country" "year" "pop" "continent" "lifeExp" "gdpPercap"
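The relationships between these functions can be checked on a small data frame. This sketch uses three rows taken from the head(gapminder) output shown in this lesson. It shows that length counts list elements (columns), while dim combines nrow and ncol.

```r
# Three rows from the start of gapminder, two columns only
df <- data.frame(year = c(1952L, 1957L, 1962L),
                 pop  = c(8425333, 9240934, 10267083))

typeof(df)    # "list": a data frame is a list of columns
length(df)    # 2: the number of list elements, i.e. columns
nrow(df)      # 3
ncol(df)      # 2
dim(df)       # 3 2
colnames(df)  # "year" "pop"
```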

At this stage, it’s important to ask ourselves if the structure R is reporting matches our intuition or expectations; do the basic data types reported for each column make sense? If not, we need to sort any problems out now before they turn into bad surprises down the road, using what we’ve learned about how R interprets data, and the importance of strict consistency in how we record our data.

Once we’re happy that the data types and structures seem reasonable, it’s time to start digging into our data proper. Check out the first few lines:

head(gapminder)

country year pop continent lifeExp gdpPercap

1 Afghanistan 1952 8425333 Asia 28.801 779.4453

2 Afghanistan 1957 9240934 Asia 30.332 820.8530

3 Afghanistan 1962 10267083 Asia 31.997 853.1007

4 Afghanistan 1967 11537966 Asia 34.020 836.1971

5 Afghanistan 1972 13079460 Asia 36.088 739.9811

6 Afghanistan 1977 14880372 Asia 38.438 786.1134

Challenge 3

It’s good practice to also check the last few lines of your data and some in the middle. How would you do this?

Searching for ones specifically in the middle isn’t too hard, but we could ask for a few lines at random. How would you code this?

Solution to Challenge 3

Checking the last few lines is relatively simple, as R already has a function for this:

tail(gapminder)
tail(gapminder, n = 15)

What about a few arbitrary rows, just in case something is odd in the middle?

Tip: There are several ways to achieve this.

The solution here uses nested functions, i.e. the result of one function call passed as an argument to another function. This might sound like a new concept, but you are already using it! Remember that my_dataframe[rows, cols] prints your data frame with the rows and columns you asked for (you might have asked for a range, or for named columns, for example). How would you get the last row if you don't know how many rows your data frame has? R has a function for this. What about getting a (pseudorandom) sample? R also has a function for this.

gapminder[sample(nrow(gapminder), 5), ]
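The same ideas can be tried on a made-up data frame that stands in for gapminder. This sketch adds set.seed(), which is not part of the original solution. Calling it fixes the random number generator, so the "random" rows come out the same on every run, and that helps reproducibility.

```r
# Made-up data frame standing in for gapminder: 20 rows, 2 columns
df <- data.frame(id = 1:20, value = seq(0.5, 10, by = 0.5))

tail(df, n = 3)            # the last three rows
df[nrow(df), ]             # the last row, without knowing the row count in advance

set.seed(42)               # fix the random number generator so the sample repeats
df[sample(nrow(df), 5), ]  # five rows chosen at random
```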

To make sure our analysis is reproducible, we should put the code into a script file so we can come back to it later.

Challenge 4

Go to File -> New File -> R Script, and write an R script to load in the gapminder dataset. Put it in the scripts/ directory and add it to version control.

Run the script using the source function, using the file path as its argument (or by pressing the "Source" button in RStudio).

Solution to Challenge 4

The source function can be used to run a script from within another script. Suppose you need to load the same type of file over and over again, with arguments tailored to that file. Instead of writing the necessary arguments again and again, you can write them once and save them in a script. Then you can use source("Your_Script_containing_the_load_function") in a new script to reuse that code without writing everything again. Check out ?source to find out more.

download.file("https://raw.githubusercontent.com/swcarpentry/r-novice-gapminder/gh-pages/_episodes_rmd/data/gapminder_data.csv", destfile = "data/gapminder_data.csv")
gapminder <- read.csv(file = "data/gapminder_data.csv", stringsAsFactors = TRUE)

To run the script and load the data into the gapminder variable:

source(file = "scripts/load-gapminder.R")
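To see what source does without downloading anything, here is a minimal sketch. It assumes a hypothetical loader script written to a temporary file in place of scripts/load-gapminder.R. The script builds a tiny data frame in a hypothetical variable called loaded instead of reading the real CSV.

```r
# Write a tiny, hypothetical loader script to a temporary file
script_path <- tempfile(fileext = ".R")
writeLines(c(
  "loaded <- data.frame(country = c('Afghanistan', 'Afghanistan'),",
  "                     year = c(1952L, 1957L),",
  "                     stringsAsFactors = TRUE)"
), script_path)

# source() runs every line of the script; by default (local = FALSE)
# the objects it creates end up in the global environment
source(script_path)
str(loaded)
```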

Challenge 5

Read the output of str(gapminder) again; this time, use what you've learned about factors, lists and vectors, as well as the output of functions like colnames and dim, to explain what everything that str prints out for gapminder means. If there are any parts you can't interpret, discuss with your neighbors!

Solution to Challenge 5

The object gapminder is a data frame with 1704 rows and 6 columns:

- country and continent are factors.
- year is an integer vector.
- pop, lifeExp, and gdpPercap are numeric vectors.
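A quick way to check the column types from this solution in one step is to apply class() to every column. This sketch uses a two-row data frame built from the head(gapminder) output, with the same column types as gapminder. On the real dataset, sapply(gapminder, class) should give the same classes.

```r
# Two gapminder rows with the same column types as the full dataset
df <- data.frame(
  country   = factor(c("Afghanistan", "Afghanistan")),
  year      = c(1952L, 1957L),
  pop       = c(8425333, 9240934),
  continent = factor(c("Asia", "Asia")),
  lifeExp   = c(28.801, 30.332),
  gdpPercap = c(779.4453, 820.8530)
)

# Class of every column at once; compare with what str() reports
sapply(df, class)
```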

Key Points

Use cbind() to add a new column to a data frame.

Use rbind() to add a new row to a data frame.

Remove rows from a data frame.

Use na.omit() to remove rows from a data frame with NA values.

Use levels() and as.character() to explore and manipulate factors.

Use str(), summary(), nrow(), ncol(), dim(), colnames(), rownames(), head(), and typeof() to understand the structure of a data frame.

Read in a csv file using read.csv().

Understand what length() of a data frame represents.

Subsetting Data

Overview

Teaching: 35 min

Exercises: 15 min

Questions

How can I work with subsets of data in R?

Objectives

To be able to subset vectors, factors, matrices, lists, and data frames

To be able to extract individual and multiple elements: by index, by name, using comparison operations

To be able to skip and remove elements from various data structures.

R has many powerful subset operators. Mastering them will allow you to easily perform complex operations on any kind of dataset.

There are six different ways we can subset any kind of object, and three different subsetting operators ([, [[, and $) for the different data structures.

Let’s start with the workhorse of R: a simple numeric vector.

x <- c(5.4, 6.2, 7.1, 4.8, 7.5)

names(x) <- c('a', 'b', 'c', 'd', 'e')

x

a b c d e

5.4 6.2 7.1 4.8 7.5

Atomic vectors

In R, simple vectors containing character strings, numbers, or logical values are called atomic vectors because they can’t be further simplified.

So now that we’ve created a dummy vector to play with, how do we get at its contents?

Accessing elements using their indices

To extract elements of a vector we can give their corresponding index, starting from one:

x[1]

a

5.4

x[4]

d

4.8

It may look different, but the square brackets operator is a function. For vectors (and matrices), it means “get me the nth element”.
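Because [ is a function, it can also be called in ordinary function form by wrapping its name in backticks. This short sketch reuses the vector x defined above and shows that both spellings return the same element.

```r
x <- c(5.4, 6.2, 7.1, 4.8, 7.5)
names(x) <- c('a', 'b', 'c', 'd', 'e')

# x[4] is shorthand for calling the function `[` with x and 4 as arguments
`[`(x, 4)
identical(x[4], `[`(x, 4))  # TRUE
```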

We can ask for multiple elements at once:

x[c(1, 3)]

a c

5.4 7.1

Or slices of the vector:

x[1:4]

a b c d

5.4 6.2 7.1 4.8

The : operator creates a sequence of numbers from the left element to the right.

1:4

[1] 1 2 3 4

c(1, 2, 3, 4)

[1] 1 2 3 4
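A few more uses of : are shown in this sketch, which again uses the vector x from above. It adds one detail not stated in the text: 1:4 and c(1, 2, 3, 4) print the same but have different storage types, because : produces integers here.

```r
x <- c(5.4, 6.2, 7.1, 4.8, 7.5)
names(x) <- c('a', 'b', 'c', 'd', 'e')

4:2                    # 4 3 2: the sequence counts down when the left end is larger
x[2:4]                 # elements b, c and d
x[4:2]                 # the same elements, in reverse order
typeof(1:4)            # "integer"
typeof(c(1, 2, 3, 4))  # "double"
```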

We can ask for the same element multiple times:

x[c(1,1,3)]

a a c

5.4 5.4 7.1

If we ask for an index beyond the length of the vector, R will return a missing value:

x[6]

<NA>

NA

This is a vector of length one containing an NA, whose name is also NA.

If we ask for the 0th element, we get an empty vector:

x[0]

named numeric(0)
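The two edge cases above (an index past the end and the index 0) can be checked directly rather than read off the printed output. This sketch reuses x and inspects the length, value, and name of each result.

```r
x <- c(5.4, 6.2, 7.1, 4.8, 7.5)
names(x) <- c('a', 'b', 'c', 'd', 'e')

out_of_range <- x[6]
length(out_of_range)        # 1
is.na(out_of_range)         # the value is NA ...
is.na(names(out_of_range))  # ... and so is its name

empty <- x[0]
length(empty)               # 0: an empty (named) numeric vector
```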

Vector numbering in R starts at 1